There’s no telling how the history of science might have changed if James Watson had had more fun with the binoculars he bought in 1940. He was only 12, but he bought them with his own money, and he had plenty to spend, having just won $100—over $2,300 at 2025 rates—on the radio game show Quiz Kids.


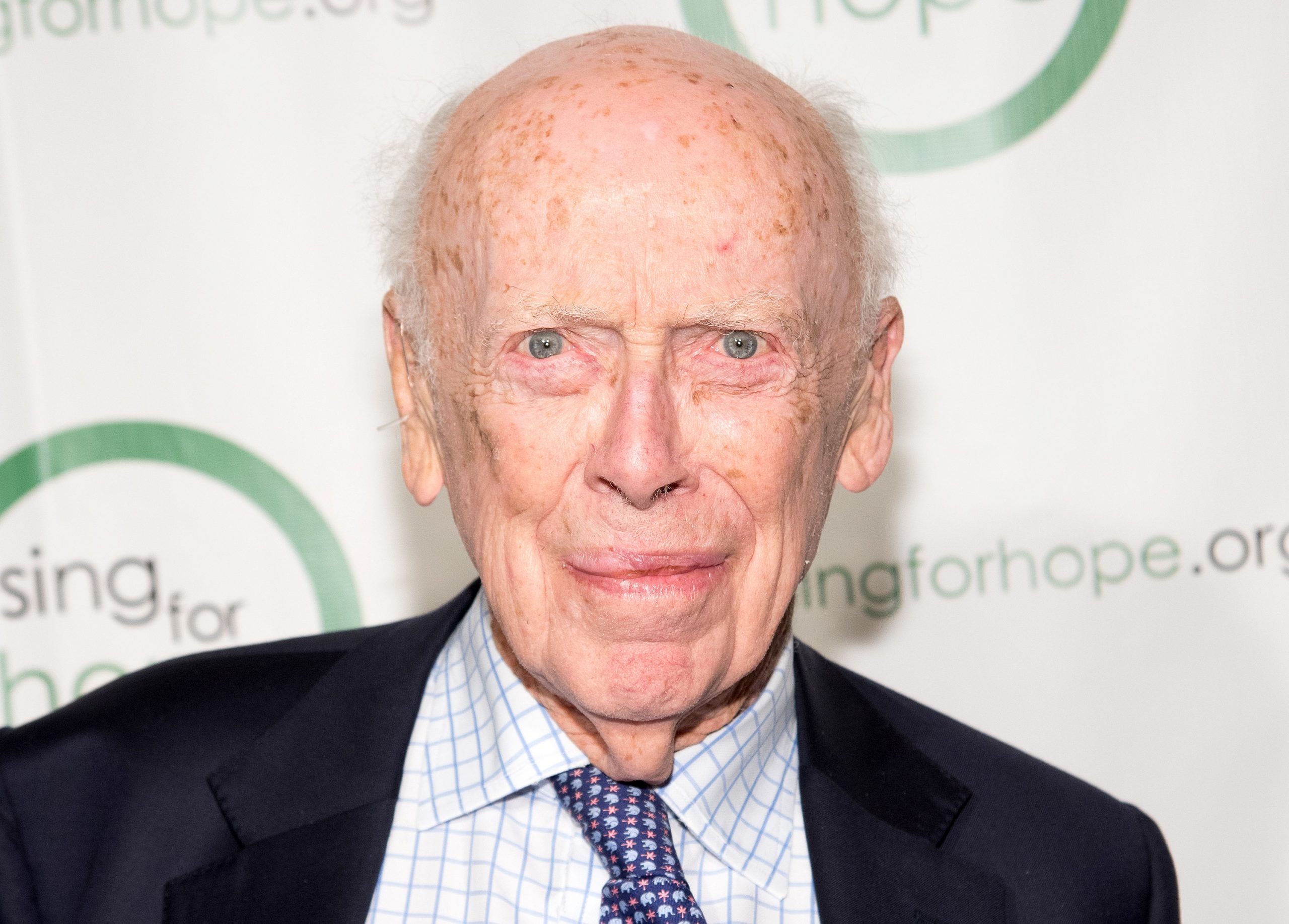

In retrospect, of course, that early win seems portentous. That James Watson, who died on Nov. 6, at age 97, of an unspecified cause, was the same James Watson who would go on to win a share of the 1962 Nobel Prize, along with Francis Crick and Maurice Wilkins, as the co-discoverer of the ladder-like, double-helix structure of DNA. He would later serve as director of Cold Spring Harbor Laboratory in New York, transforming it into the leading research institution it became, and would redefine the college science text, and shape an entire field of study, with two landmark books, including 1965’s Molecular Biology of the Gene.

In 1940, however, Watson was not interested in molecular biology, and he certainly did not know what a double helix was. What he did love was bird-watching, a passion he had picked up from his father, which was why he bought the binoculars. But birding didn’t stick. Watson entered the University of Chicago in 1943 at age 15 as part of the school’s gifted youngster program and earned his B.S. in zoology, and later a PhD from Indiana University. After reading the 1944 book What is Life? by polymath Erwin Schrödinger, who had strayed from his customary physics paddock into the world of molecular biology, however, Watson decided that that might be the right field for him.

History would record that it was—that his extraordinary skills were best served by exploring and explaining the molecular fundamentals of all biology and, in the process, helping to exponentially expand humanity’s understanding of itself. But history would be left to wonder if Watson the person—not Watson the scientist—might have been better served by remaining in the gentler company of animals, if he might have become gentler, better, more decent himself. Because over the course of his life, he slowly became profoundly indecent.

If it’s true, as former Secretary of Defense Donald Rumsfeld once said, that a country goes to war with the army it has, not the army it may wish it had, so too is it true that a culture gets the heroes it gets, not the ones it would prefer. So you can’t have Charles Lindbergh without the Nazi sympathies and antisemitism. You can’t have George Washington and Thomas Jefferson without the slave-owning. And you can’t have Nobel Laureate Watson without his 2007 observation that he was “inherently gloomy” about the future of Africa, because “social policies are based on the fact that their intelligence is the same as ours — whereas all the testing says not really.”

You can’t have Watson without his comment that same year that “some anti-Semitism is justified. Just like some anti-Irish feeling is justified. If you can’t be criticized, that’s very dangerous. You lose the concept of a free society.”

You can’t have Watson without the 2000 lecture in which he argued that thin people are harder working than fat people because they’re sadder and thus more driven, and that, “Whenever you interview fat people, you feel bad, because you know you’re not going to hire them.” And you can’t have him without his dangerously eugenic belief, expressed in a 2003 documentary, that, “If you are really stupid, I would call that a disease. The lower 10 percent who really have difficulty, even in elementary school. So I’d like to get rid of that.”

All of that, like it or not, is part of the paradox that was James Watson.

The route Watson took from his South Side Chicago childhood to the Nobel banquet in Stockholm in 1962 was surprisingly quick. In 1948, when he was just 20, he joined a group at Indiana University studying bacteriophages, or viruses that infect bacteria. Investigating viruses means investigating genes, since the way a virus hijacks a cell is by forcing it to replicate the viral genome in place of the host’s. At the time, genes were thought to be self-replicating proteins (in fact, it’s genes that code for the production of proteins) and DNA was thought to be nothing more than what early 20th century biophysicist Max Delbrück described as a “stupid tetranucleotide,” a sort of inert physical scaffold on which proteins could be built.

The most powerful tool available to study genetics at the time was x-ray diffraction, a process that involves beaming x-rays through crystallized proteins and other structures and analyzing the scatter pattern that results. Do it right and you can effectively reverse engineer the crystal in much the way looking at a shadow makes it possible to identify the thing casting it. In September of 1950, Watson moved to the University of Copenhagen and during his time there, attended a seminar in Naples, where he met Wilkins and got his first look at an x-ray diffraction image of crystalline DNA. He then moved to the University of Cambridge’s Cavendish Laboratory, where he met Crick and they began exploring the DNA structure more deeply.

In 1951, after a year of intensive work, they thought they had solved it. They hadn’t. DNA, Watson and Crick confidently announced, was a triple-stranded, not double-stranded, structure, with the base pairs of nitrogenous molecules that make up the rungs of the DNA ladder on the outside, not the inside. The Nobel Prize website still decorously refers to that early model as “unsatisfactory.” Other, more candid commentators label it “a disaster.” Whatever it was, it was wrong.

The breakthrough did not come until 1953, when Watson visited Wilkins at King’s College in London, and Wilkins showed him a new x-ray crystallography image of DNA. The image was made by PhD student Raymond Gosling, who was working for Rosalind Franklin, a gifted chemist and crystallographer who also worked at the college. Watson was dazzled.

“The instant I saw the picture my mouth fell open and my pulse began to race,” Watson wrote in his 1968 book, The Double Helix. “The pattern was unbelievably simpler than those obtained previously. Moreover, the black cross of reflections which dominated the picture could only arise from a helical structure.”

Critics have argued that Watson, Crick and Wilkins effectively stole Franklin’s work, especially since they later also came into possession of some of the data she had derived by analyzing the image. They did not—but nor did they cover themselves in glory.

Gosling, who had been working for Franklin when he created the picture, was now working for Wilkins, who thus had legitimate access to his work. What’s more, Franklin’s data was not confidential, but rather was readily available and had been passed to Watson and Crick by other researchers who knew that they were exploring the structure of DNA. Informal protocol did call for Watson, Crick and Wilkins to tell Franklin that they were working with her material and to seek her approval, which they did not do; that was a breach of professional courtesy, however, not scientific ethics. Most important, Franklin’s data was raw; it required far more work and far more independent analysis before it could reveal the double helix. Watson, Crick and Wilkins did that work—and they did it well.

In the April 25, 1953 issue of Nature, three separate papers were published: one by Watson and Crick on the structure of DNA; one by Wilkins and his colleagues focusing on more precise components of the overall structure Watson and Crick had described; and one by Franklin and Gosling on the interaction of water and a salt known as sodium thymonucleate within the DNA—findings drawn from their analysis of their crystallography picture.

But it was Watson’s, Crick’s and Wilkins’ work alone that was recognized by the Nobel committee in 1962. In fairness, that was partly because they took a much more comprehensive approach to the work than Franklin did, and partly because Franklin died, of ovarian cancer, in 1958, and the Nobel Prize is not awarded posthumously. But very much in fairness too, it’s likely that none of the men would have accomplished what they did—at least not when they did it—without building on the foundation Franklin laid for them.

Crick and Wilkins, who were both born in 1916, both died in 2004, and that left Watson to serve as the sole—decidedly flawed—representative of their legacy. In January, 2019, 11 years after he was named Chancellor Emeritus of Cold Spring Harbor Laboratory, he was stripped of that title when a new documentary, PBS’s Decoding Watson, was released. Asked if his views on race and IQ had changed in the intervening decade, he was starkly, darkly clear.

“No, not at all,” he said. “I would like for them to have changed, that there be new knowledge which says your nurture is much more important than nature. But I haven’t seen any knowledge. And there’s a difference on the average between blacks and whites on I.Q. tests. I would say the difference is, it’s genetic.”

In the #MeToo era, his comments on women have gotten a second look—and have not fared well. In the same 2003 documentary in which he labeled stupidity a disease, he observed that it might not be such a bad idea to engineer genes for human beauty. “People say it would be terrible if we made all girls pretty,” he said. “I think it would be great.” At a conference in 2012 in which he was asked about women in science, he said, “I think having all these women around makes it more fun for the men but they’re probably less effective.”

A look back at his much earlier observations on women gives the lie to the idea that such comments might just be the out-of-touch beliefs of a very old man. In The Double Helix, which was published the year Watson turned 40, he took aim at Franklin and showed her no pity. He referred to her throughout the text by the demeaning diminutive “Rosy,” which was not how she referred to herself, and his description of her dripped with patriarchal condescension:

“Though her features were strong, she was not unattractive and might have been quite stunning had she taken even a mild interest in clothes. This she did not. There was never lipstick to contrast with her straight black hair, while at the age of thirty-one her dresses showed all the imagination of English bluestocking adolescents.” He then offered a succinct prescription for how he and his colleagues might have resolved the tensions that existed in the lab between Franklin and the men: “Clearly Rosy had to go or be put in her place.”

In 2014, Watson sold his Nobel Prize (the only Laureate ever to do such a thing), raising $4.1 million, in part, he said, because his comments on race had caused him to become an “unperson,” and his speaking engagements had dried up. His very name had indeed become radioactive in the community that once embraced him: In 2018, biologist Eric Lander of MIT offered a formal toast to Watson for his work as head of the Human Genome Project from 1990 to 1992, and drew a backlash from his colleagues that was loud and angry enough that he issued a formal apology.

“I reject [Watson’s] views as despicable,” Lander wrote in an open statement. “They have no place in science, which must welcome everyone. I was wrong to toast, and I’m sorry.”

Other amends have been made as well. Franklin is increasingly mentioned in the same breath as Watson, Crick and Wilkins when the history of DNA science comes up, and in 2019, the European Space Agency announced that it had named its new Mars rover after her. “It’s fitting that the robot bearing her name will search for the building blocks of life on Mars, as she did so on Earth through her work on DNA,” said Alice Bunn, the international director of the United Kingdom Space Agency, when the announcement was made.

Reasonable people can, and surely will, disagree on whether it will ever be possible to applaud the science Watson helped give the world without implicitly endorsing the ugliness with which he helped to darken it. While science values the binary (established proofs and truths versus untruths and unknowns), much of the rest of life is messier, more ambiguous. Watson’s work opened the door to modern genomic science, to our current understanding of the genetic roots of disease, to technologies like CRISPR that allow us to edit some of those diseases out of our biological software, and to the very understanding that, paradoxically, helps debunk his ideas of racial differences in intellect.

Cold Spring Harbor, meantime, continues to thrive in part because of the work Watson did in his years as its director to expand the scope of its research and its sources of funding, and Molecular Biology of the Gene is now in its seventh edition, essential reading even 60 years after its initial publication. In life, Watson passed up the chance to unsay—or at least walk back—the inflammatory things he said, and now he no longer has that option. So his words will endure every bit as much as his work does. It is a fraught legacy—but it’s the one he chose.