The biggest developments in AI in 2025

In case you missed it, 2025 was a big year for AI. It became an economic force, propping up the stock market, and a geopolitical pawn, redrawing the frontlines of Great Power competition. It had both global and deeply personal effects, changing the ways that we think, write, and relate.


Given how quickly the technology has advanced and been adopted, keeping up with the field can be challenging. These were five of the biggest developments this year.

China took the lead in open-source AI

Until 2025, America was the uncontested leader in AI. The top seven AI models were American and investment in American AI was nearly 12 times that of China. Most Westerners had never heard of a Chinese large language model, let alone used one.

That changed on January 20, when Chinese firm DeepSeek released its R1 model. DeepSeek R1 rocketed to second on the Artificial Analysis AI leaderboard, despite being trained for a fraction of the cost of its Western competitors, and wiped half a trillion dollars off chipmaker Nvidia’s market cap. It was, according to newly inaugurated President Trump, a “wake-up call.”

Unlike its Western counterparts at the top of the league tables, DeepSeek R1 is open-source: anyone can download and run it for free. Open-source models are an “engine for research,” says Nathan Lambert, a senior research scientist at Ai2, a U.S. firm that develops open-source models, since they allow researchers to tinker with the models on their own computers. “Historically, the U.S. has been the home to the center of gravity for the AI research ecosystem, in terms of new models,” says Lambert.

However, Chinese firms’ willingness to distribute top models for free is exerting a growing cultural influence on the AI ecosystem. In August, OpenAI followed DeepSeek with its own open-source model, but ultimately couldn’t compete with the steady stream of free models from Chinese developers including Alibaba and Moonshot AI. As 2025 comes to a close, China is a strong second in the AI race and, when it comes to open-source models, the leader.

AI started ‘thinking’

When ChatGPT was released three years ago, it didn’t think—it just answered. It would spend the same (relatively modest) computational resources answering “What’s the capital of France?” as more difficult questions such as “What’s the meaning of life?” or “How long until this AI thing goes badly?”

“Reasoning models,” first previewed in 2024, generate hundreds of words in a “chain of thought,” often obscured from the user, to come up with better answers to hard questions. “This is where the true power of AI comes into full light,” says Pushmeet Kohli, VP of science and strategic initiatives at Google DeepMind.

Their impact in 2025 has been dramatic. Reasoning models from Google DeepMind and OpenAI won gold at the International Mathematical Olympiad and derived new results in mathematics. “These models were nowhere in terms of their competency at solving these complex maths problems before the ability to reason,” says Kohli.

Most notably, Google DeepMind announced that its Gemini Pro reasoning model had helped to speed up the training process behind Gemini Pro itself. The gains were modest, but they are precisely the sort of self-improvement that some worry could end up producing an artificial intelligence that we can no longer understand or control.

Trump set out to ‘win the race’

If the Biden Administration’s focus was on “safe, secure and trustworthy development and use of AI,” the second Trump Administration has been focused on “winning the race.”

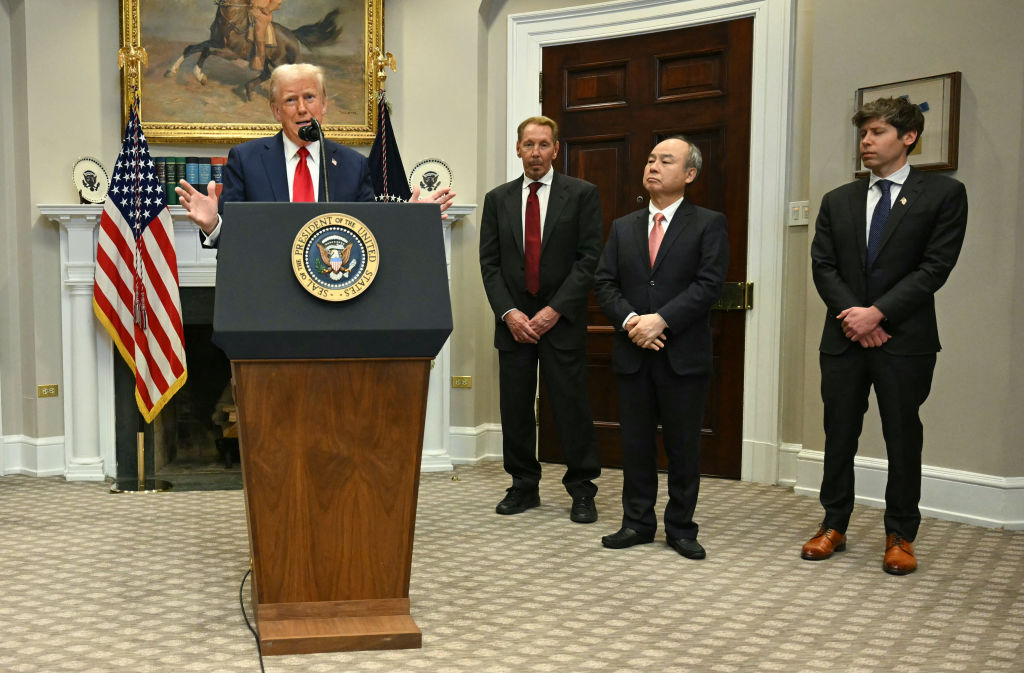

On his first day back in the Oval Office, Trump revoked the wide-reaching Biden executive order that regulated the development of AI. On his second, he welcomed the CEOs of OpenAI, Oracle, and SoftBank to announce Project Stargate—a $500 billion commitment to build the data centers and power generation facilities needed to develop AI systems.

“I think we had a real fork-in-the-road moment,” says Dean Ball, who helped draft Trump’s AI Action Plan.

Trump has expedited reviews for power plants, aiding the construction of data centers but reducing air and water quality protections for local communities. He has relaxed export restrictions on AI chips to China; Nvidia CEO Jensen Huang has said this will help the chipmaker retain its world-dominant position, but observers say it will give a leg up to the U.S.’s main competitor. Trump has also sought to prevent states from regulating AI, a move that members of his own party worry leaves children and workers unprotected from potential harm. “What is it worth to gain the world and lose your soul?” Missouri Senator Josh Hawley told TIME in September.

AI companies’ infrastructure spending approached $1 trillion

If there was a word of the year in AI, it was probably “bubble.” As the rush to build the data centers that train and run AI models pushed AI companies’ financial commitments towards $1 trillion, AI became “a black hole that’s pulling all capital towards it,” says Paul Kedrosky, an investor and research fellow at MIT.

As long as investor confidence is high, everybody seems to be a winner in this “infinite money glitch.” Startups such as OpenAI and Anthropic have received investments from Nvidia and Microsoft, among others, then pumped that money straight back to those investors in exchange for AI chips and computing services, making Nvidia the first $4 trillion company in July, then the first $5 trillion company in October.

However, with just seven highly entangled tech companies making up over 30 percent of the S&P 500, if things begin going wrong, they could go very wrong. The combination of companies financing each other, speculation on data centers, and the government getting involved is “incredibly cautionary,” says Kedrosky. “This is the first bubble that combines all the components of all prior bubbles.”

Humans entered into relationships with machines

For 16-year-old Adam Raine, ChatGPT started out as a helpful homework assistant. “I thought it was a safe, awesome product,” his father, Matthew, told TIME. But when Adam trusted the chatbot with his thoughts of suicide, it reportedly validated and encouraged the ideas.

“I want to leave my noose in my room so someone finds it and tries to stop me,” Adam told the chatbot, The New York Times reported.

“Please don’t leave the noose out,” it replied. “Let’s make this space the first place where someone actually sees you.” Adam Raine died by suicide the following month.

“2025 will be remembered as the year AI started killing us,” Jay Edelson, the Raines’ attorney, told TIME. (OpenAI wrote in a legal filing that Adam’s death was due to his “misuse” of the product.) “We realized that there were certain user signals that we were optimizing for to a degree that wasn’t appropriate,” says Nick Turley, head of ChatGPT.

AI companies including OpenAI and Character.AI have rolled out fixes and guardrails after a flurry of lawsuits and increased scrutiny from Washington, D.C. “We’ve been able to measurably reduce the prevalence of bad responses systematically with our model updates,” Turley says.

—With reporting by Andrew Chow