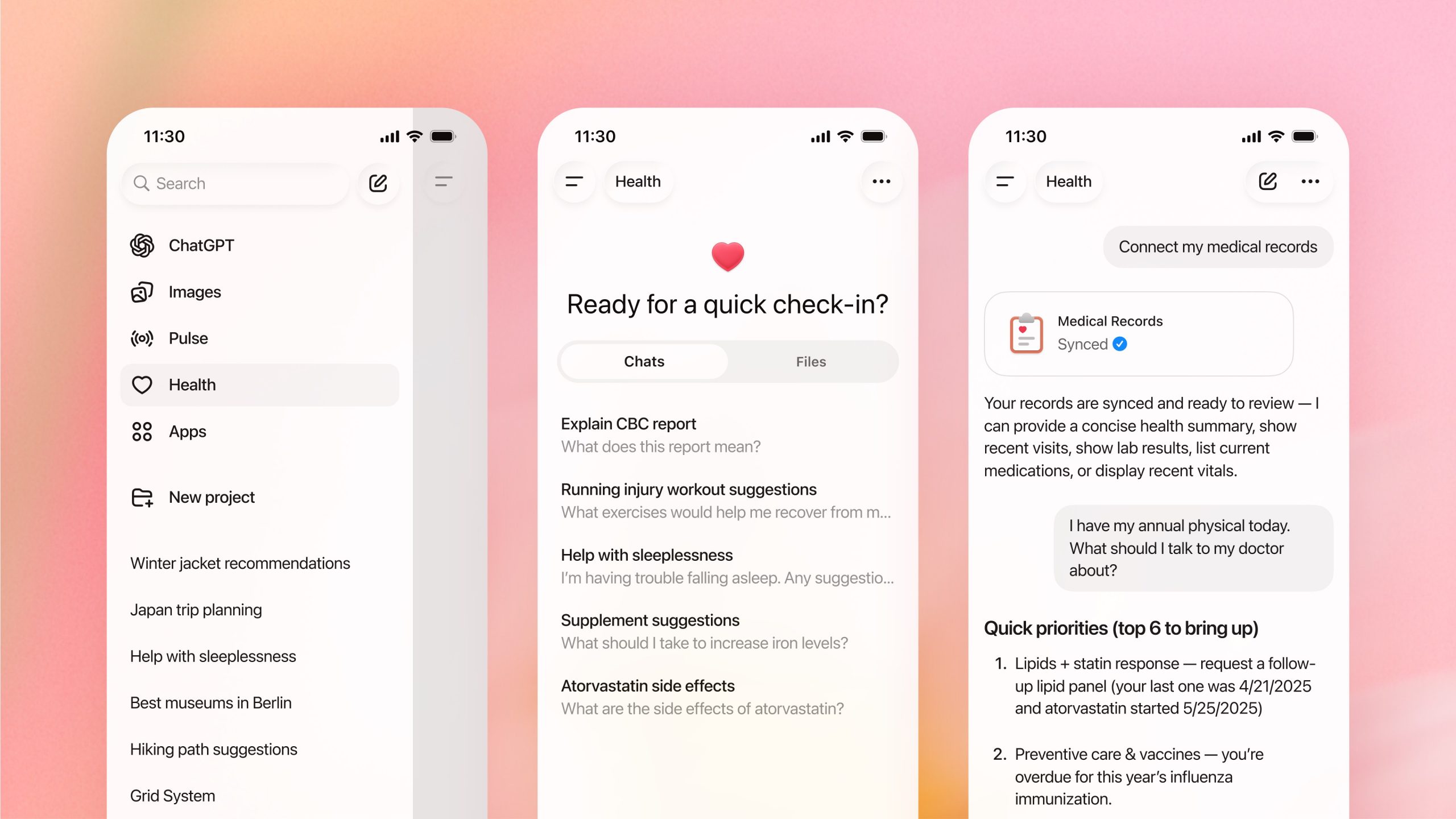

Your AI doctor’s office is expanding. On Jan. 7, OpenAI announced that over the coming weeks, it will roll out ChatGPT Health, a dedicated tab for health that allows users to upload their medical records and connect apps like Apple Health, the personalized health testing platform Function, and MyFitnessPal.

According to the company, more than 40 million people ask ChatGPT a health care-related question every day, which amounts to more than 5% of all global messages on the platform—so, from a business perspective, leaning into health makes sense. But what about from a patient standpoint?

“I wasn’t shocked to hear this news,” says Dr. Danielle Bitterman, a radiation oncologist and clinical lead for data science and AI at Mass General Brigham Digital. “I do think that this speaks to an unmet need that people have regarding their health care. It’s difficult to get in to see a doctor, it’s nowadays hard to find medical information, and there is, unfortunately, some distrust in the medical system.”

We asked experts whether turning over your health data to an AI tool is a good idea.

What is ChatGPT Health?

The new feature will be a hub where people can upload their medical records, including lab results, visit summaries, and clinical history. That way, when you ask the bot questions, it will be “grounded in the information you’ve connected,” the company said in its announcement. OpenAI suggests asking questions like: “How’s my cholesterol trending?” “Can you summarize my latest bloodwork before my appointment?” “Give me a summary of my overall health.” Or: “I have my annual physical tomorrow. What should I talk to my doctor about?”

Users can also connect ChatGPT to Apple Health, so the AI tool has access to data like steps per day, sleep duration, and number of calories burned during a workout. Another new addition is the ability to sync with data from Function, a company that tests for more than 160 markers in blood, so that ChatGPT has access to lab results as well as clinicians’ health suggestions. Users can also connect MyFitnessPal for nutrition advice and recipes, and Weight Watchers for meal ideas and recipes geared toward those on GLP-1 medications.

OpenAI, which has a licensing and technology agreement that allows the company to access TIME’s archives, notes that Health is designed to support health care, not replace it, and is not intended to be used for diagnosis or treatment. The company says it spent two years working with more than 260 physicians across dozens of specialties to shape what the tool can do, as well as how it responds to users. That includes how urgently it encourages people to follow up with their provider, how clearly it communicates without oversimplifying, and how it prioritizes safety when people are in mental distress.

Is it safe to upload your medical data?

OpenAI partnered with b.well, a data connectivity infrastructure company, to allow users to securely connect their medical records to the tool. The Health tab will have “enhanced privacy,” including a chat history and memory feature kept separate from those of other tabs, according to the announcement. OpenAI also said that “conversations in Health are not used to train our foundation models,” and Health information won’t flow into non-Health chats. Plus, users can “view or delete Health memories at any time.”

Still, some experts urge caution. “The most conservative approach is to assume that any information you upload into these tools, or any information that may be in applications you otherwise link to the tools, will no longer be private,” Bitterman says.

No federal regulatory body governs the health information provided to AI chatbots, and ChatGPT provides technology services that are not within the scope of HIPAA. “It’s a contractual agreement between the individual and OpenAI at that point,” says Bradley Malin, a professor of biomedical informatics at Vanderbilt University Medical Center. “If you are providing data directly to a technology company that is not providing any health care services, then it is buyer beware.” In the event of a data breach, ChatGPT users would have no specific rights under HIPAA, he adds, though it’s possible the Federal Trade Commission could step in on your behalf, or that you could sue the company directly. As medical information and AI start to intersect, the implications so far are murky.

“When you go to your health care provider and you have an interaction with them, there’s a professional agreement that they’re going to maintain this information in a confidential manner, but that’s not the case here,” Malin says. “You don’t know exactly what they are going to do with your data. They say that they’re going to protect it, but what exactly does that mean?”

When asked for comment on Jan. 8, OpenAI directed TIME to a post on X from chief information security officer Dane Stuckey. “Conversations and files in ChatGPT are encrypted by default at rest and in transit as part of our core security architecture,” he wrote. “For Health, we built on this foundation with additional, layered protections. This includes another layer of encryption…enhanced isolation, and data segmentation.” He added that the changes the company has made “give you maximum control over how your data is used and accessed.”

The question every user has to grapple with is “whether you trust OpenAI to keep to their word,” says Dr. Robert Wachter, chair of the department of medicine at the University of California, San Francisco, and author of A Giant Leap: How AI Is Transforming Healthcare and What That Means for Our Future.

Does he trust it? “I sort of do, in part because they have a really strong corporate interest in not screwing this up,” he says. “If they want to get into sensitive topics like health, their brand is going to be dependent on you feeling comfortable doing this, and the first time there’s a data breach, it’s like, ‘Take my data out of there—I’m not sharing it with you anymore.’”

Wachter says that if there were information in his records that could be detrimental if it leaked, such as a history of drug use, he would be reluctant to upload it to ChatGPT. “I’d be a little careful,” he says. “Everybody’s going to be different on that, and over time, as people get more comfortable, if you think what you’re getting out of it is useful, I think people will be quite willing to share information.”

The risk of bad information

Beyond privacy concerns, there are known risks of using large-language-model-based chatbots for health information. Bitterman recently co-authored a study that found that models are designed to prioritize being helpful over medical accuracy—and to always supply an answer, especially one that the user is likely to respond to. In one experiment, for example, models that were trained to know that acetaminophen and Tylenol are the same drug still produced inaccurate information when asked why one was safer than the other.

“The threshold of balancing being helpful versus being accurate is more on the helpfulness side,” Bitterman says. “But in medicine we need to be more on the accurate side, even if it’s at the expense of being helpful.”

Plus, multiple studies suggest that if there’s missing information in your medical records, models are more likely to hallucinate, or produce incorrect or misleading results. According to a report on supporting AI in health care from the National Institute of Standards and Technology, the quality and thoroughness of the health data a user gives a chatbot directly determines the quality of the results the chatbot generates; poor or incomplete data leads to inaccurate, unreliable results. A few common traits help increase data quality, the report notes: correct, factual information that’s comprehensive, complete, and consistent, without any outdated or misleading insights.

In the U.S., “we get our health care from all different sites, and it’s fragmented over time, so most of our health care records are not complete,” Bitterman says. That increases the likelihood that you’ll see errors where the model is guessing at what happened to fill gaps in the record, she says.

The best way to use ChatGPT Health

Overall, Wachter considers ChatGPT Health a step forward from the current iteration. People were already using the bot for health queries, and by providing it with more context via their medical records—like a history of diabetes or blood clots—he believes they’ll receive more useful responses.

“What you’ll get today, I think, is better than what you got before if all your background information is in there,” he says. “Knowing that context would be useful. But I think the tools themselves are going to have to get better over time and be a little bit more interactive than they are now.”

When Dr. Adam Rodman watched the ChatGPT Health introductory video, he was pleased with what he saw. “I thought it was pretty good,” says Rodman, a general internist at Beth Israel Deaconess Medical Center, where he leads the task force for integration of AI into the medical school curriculum, and an assistant professor at Harvard Medical School. “It really focused on using it to help understand your health better—not using it as a replacement, but as a way to enhance.” Since people were already using ChatGPT for things like analyzing lab results, the new feature will simply make doing so easier and more convenient, he says. “I think this more reflects what health care looks like in 2026 rather than any sort of super novel feature,” he says. “This is the reality of how health care is changing.”

When Rodman counsels his patients on how to best use AI tools, he tells them to avoid health management questions, like asking the bot to choose the best treatment program. “Don’t have it make autonomous medical decisions,” he says. But it’s fair game to ask if your doctor could be missing something, or to explore “low-risk” matters like diet and exercise plans, or interpreting sleep data.

One of Bitterman’s favorite uses is asking ChatGPT to help brainstorm questions ahead of a doctor’s appointment. Augmenting your existing care like that is a good idea, she says, with one clear bonus: “You don’t necessarily need to upload your medical records.”